I’m going to be excommunicated from Agility for this.

But in this article I’m going to propose a maturity assessment based on relative estimation, one that should make it noticeably easier for cells/squads/pods/teams to work together in an Enterprise environment.

How am I going to do it?

It relies a lot on groundwork and consensus.

What do I mean? Let me explain.

The Assumptions

We all know how to do an estimation via Planning Poker in Scrum, right? I’m going to assume that yes, we do. Also, I’m going to assume that you’re following a system based on the Scrum Bible at scrum.org, in an Enterprise setting. You have cells doing work in User Stories, Features and Epics, in two-week Sprints.

You’re also using User Story Points to measure them in the usual modified Fibonacci: 0.5, 1, 2, 3, 5, 8, 13, 20, 40, 100.

Does this make sense? If not, tweak it to your preferred values.

The Consensus

We need to set a consensus. It’s going to be arbitrary, but it will give us a frame of reference.

Our consensus is twofold. First, all Sprints last two weeks. Second, we’ll assume that 8 User Story Points (USPs) are the maximum that a single person can produce in a Sprint. Taking this into account, 8 USPs are two weeks, 5 USPs are a week and some days, 3 USPs are roughly one week, 2 USPs are more than a couple of days but less than a week, and 1 USP is a couple of days at most.
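To make that frame of reference easy to share, here is a minimal Python sketch of the mapping. The names `ROUGH_DURATION` and `rough_duration` are my own for illustration, and values the consensus does not mention (such as 0.5) are deliberately left without a band.

```python
# Rough duration bands from the consensus (one person, two-week Sprint).
# The dictionary and function names are illustrative, not part of any tool.
ROUGH_DURATION = {
    1: "a couple of days at most",
    2: "more than a couple of days, less than a week",
    3: "roughly one week",
    5: "a week and some days",
    8: "two weeks (one full Sprint)",
}

def rough_duration(points):
    """Return the agreed duration band for a single-person estimate."""
    if points > 8:
        # 8 USPs is the agreed ceiling for one person in one Sprint.
        return "more than one person can deliver in a Sprint"
    # Values without an agreed band (for example 0.5) are reported as such.
    return ROUGH_DURATION.get(points, "no band agreed for this value")

print(rough_duration(3))
print(rough_duration(13))
```

Adjust the bands if your own consensus differs; the point is only that everyone reads the same number the same way.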

Yes, these are rough estimates; that’s the idea. At the estimation point, we cannot be so precise that we zero in on an exact number of hours. When will we know how much it cost? We will know how much the User Story cost after we finish it.

As a bonus, we can come back later to check the actual cost of the User Story.

So, if we follow the usual distribution for Scrum, we can think of a User Story as something that’s going to be taken care of in one Sprint (ideally). Therefore, for a 5-person squad/cell, the maximum ideal size of a User Story should be 40 USPs (8 USPs per person times five).

If we use 3 Sprints per release, then Features will be in the 100-120 USP range (40 times three). And Epics, being worked within quarters, will probably be between 120 and 240 USPs.

(Of course, adjust to your team size and capacity.)

Where does this leave us? We can use it to check that User Stories, Features, and Epics are estimated, sized, and split correctly.
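The arithmetic behind those ceilings, and the check itself, can be sketched in a few lines of Python. This is only an illustration under the assumptions in this article: 8 USPs per person per Sprint, up to 3 Sprints per Feature, and an Epic capped at two Features (240 USPs for a team of five). It checks upper bounds only, and the backlog items are made up for the example.

```python
# Consensus values from the article; tweak them to your own agreement.
POINTS_PER_PERSON_PER_SPRINT = 8
SPRINTS_PER_FEATURE = 3
FEATURES_PER_EPIC = 2  # assumption: an Epic is at most two Features

def size_ceilings(team_size):
    """Maximum size, in USPs, for each kind of backlog item."""
    story = POINTS_PER_PERSON_PER_SPRINT * team_size
    feature = story * SPRINTS_PER_FEATURE
    epic = feature * FEATURES_PER_EPIC
    return {"User Story": story, "Feature": feature, "Epic": epic}

def oversized(items, team_size):
    """Return (name, kind, points, ceiling) for items above their ceiling."""
    ceilings = size_ceilings(team_size)
    return [
        (name, kind, points, ceilings[kind])
        for name, kind, points in items
        if points > ceilings[kind]
    ]

# Hypothetical backlog, invented purely for illustration.
backlog = [
    ("Login form", "User Story", 13),
    ("Full checkout", "User Story", 100),
    ("Payments", "Feature", 120),
    ("Storefront", "Epic", 300),
]

for name, kind, points, limit in oversized(backlog, team_size=5):
    print(f"{kind} '{name}': {points} USPs exceeds the ceiling of {limit}")
```

A script like this only catches items that are too big. Judging whether something is split well still needs a human, or, as we’ll see, a well-briefed AI.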

The Prompt-craft

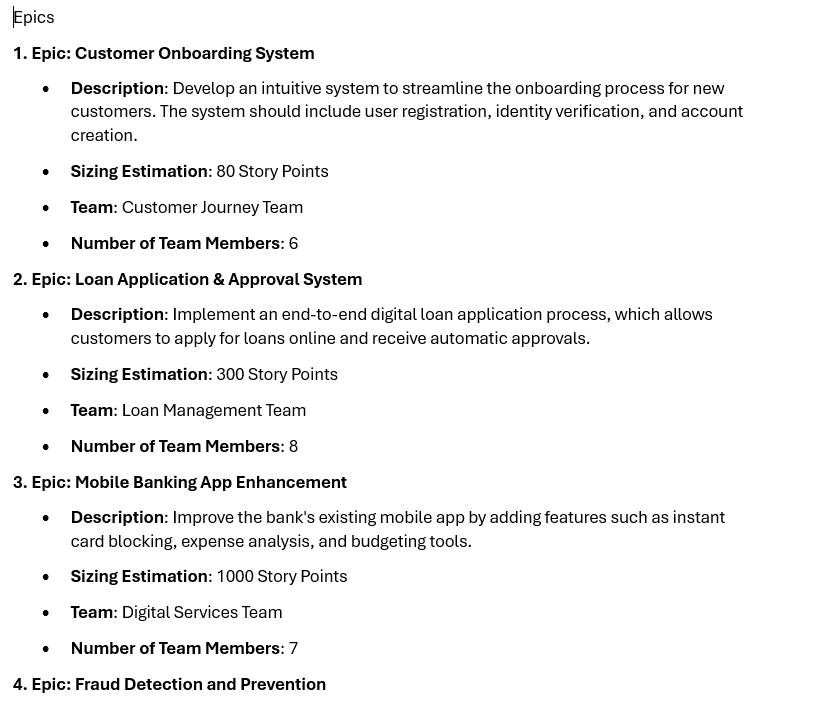

This is going to take some work. I’ve created a list of Epics, Features and User Stories.

You can download it here.

Now, let’s see if we can get the AI to do the work for us. But this is going to take some explaining.

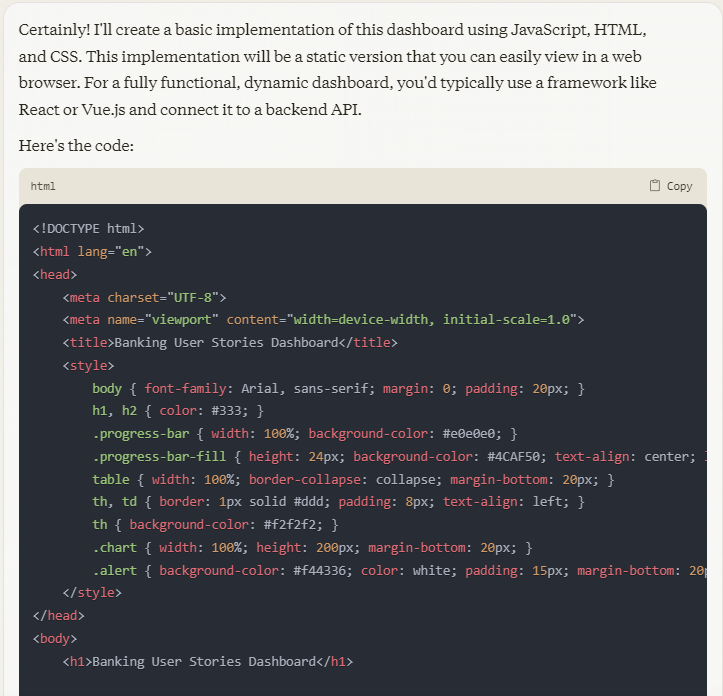

I’m going to use ChatGPT (GPT-4o with Canvas) and get it to understand what we want.

I am an Agile Coach trying to understand if the Epics, Features and User Stories at my work are correctly sized. For that, I have reached this consensus in which a person can only produce 8 User Story Points per Sprint, and a user Story can only take one Sprint. Therefore, the maximum a User Story can be sized is 40 User Story Points for a Team of 5. I also have reached a consensus that a Feature should be produced between one Sprint and three Sprints, therefore, between 40 User Story Points and 120 User Story Points for a team of 5. And an Epic is at most Two Features, that are produced each Quarter, so between 80 and 240 User Story Points for a team of Five. I have the following list of Epics, Features and User Stories. Can you analyze it and let me know which Epics, Features and User Stories are mis-sized? Take into account the team size relative to the sizing. Write an output explaining those who are not acceptable.

Whew! That was a mouthful, wasn’t it? But we can see that we use the role-playing that we introduced last time and now we’re introducing another concept: assumptions.
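If your team size or consensus differs, you can generate the assumptions part of the prompt instead of retyping it. The sketch below is a hypothetical helper (`build_sizing_prompt` is my own name, and the wording is a shortened paraphrase of the prompt above, not a tested replacement); it only assembles text and does not call any AI service.

```python
def build_sizing_prompt(backlog_text, team_size=5, points_per_person=8):
    """Assemble a sizing-review prompt from the consensus numbers."""
    story_max = points_per_person * team_size            # one Sprint of team capacity
    feature_min, feature_max = story_max, 3 * story_max  # one to three Sprints
    epic_min, epic_max = 2 * feature_min, 2 * feature_max  # at most two Features
    return (
        "I am an Agile Coach trying to understand if the Epics, Features and "
        "User Stories at my work are correctly sized. "
        f"A person can only produce {points_per_person} User Story Points per "
        "Sprint, and a User Story can only take one Sprint, so the maximum "
        f"size of a User Story is {story_max} User Story Points for a team of "
        f"{team_size}. A Feature should be between {feature_min} and "
        f"{feature_max} User Story Points, and an Epic between {epic_min} and "
        f"{epic_max} User Story Points. Analyze the following list, take the "
        "team size into account, and explain which items are mis-sized.\n\n"
        f"{backlog_text}"
    )

# Hypothetical one-line backlog, just to show the call.
print(build_sizing_prompt("Epic: Storefront, 300 User Story Points"))
```

With the defaults this reproduces the 40, 40 to 120, and 80 to 240 figures used in the prompt above.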

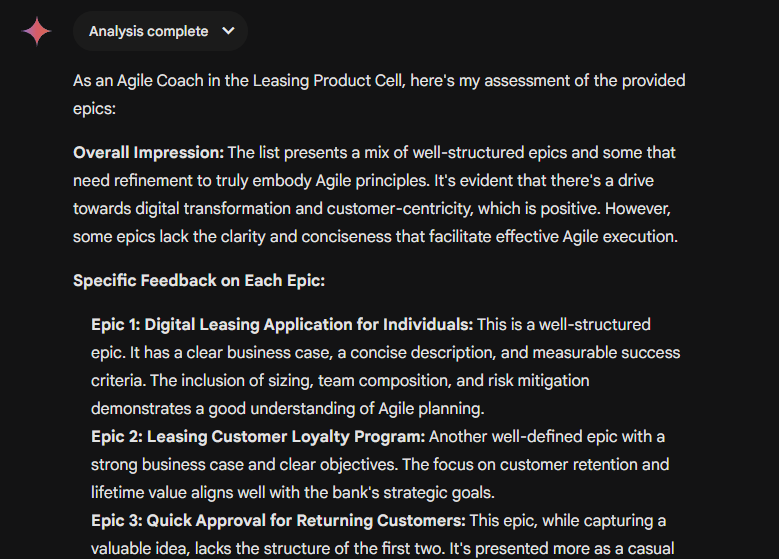

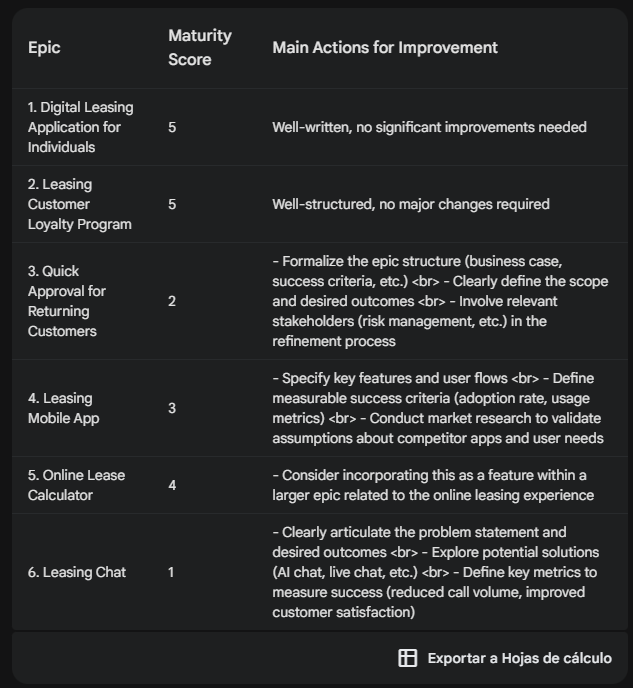

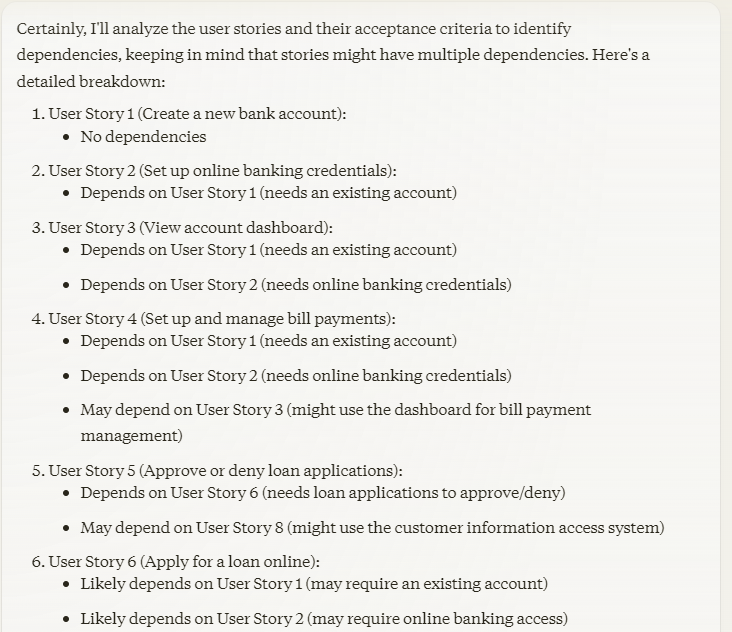

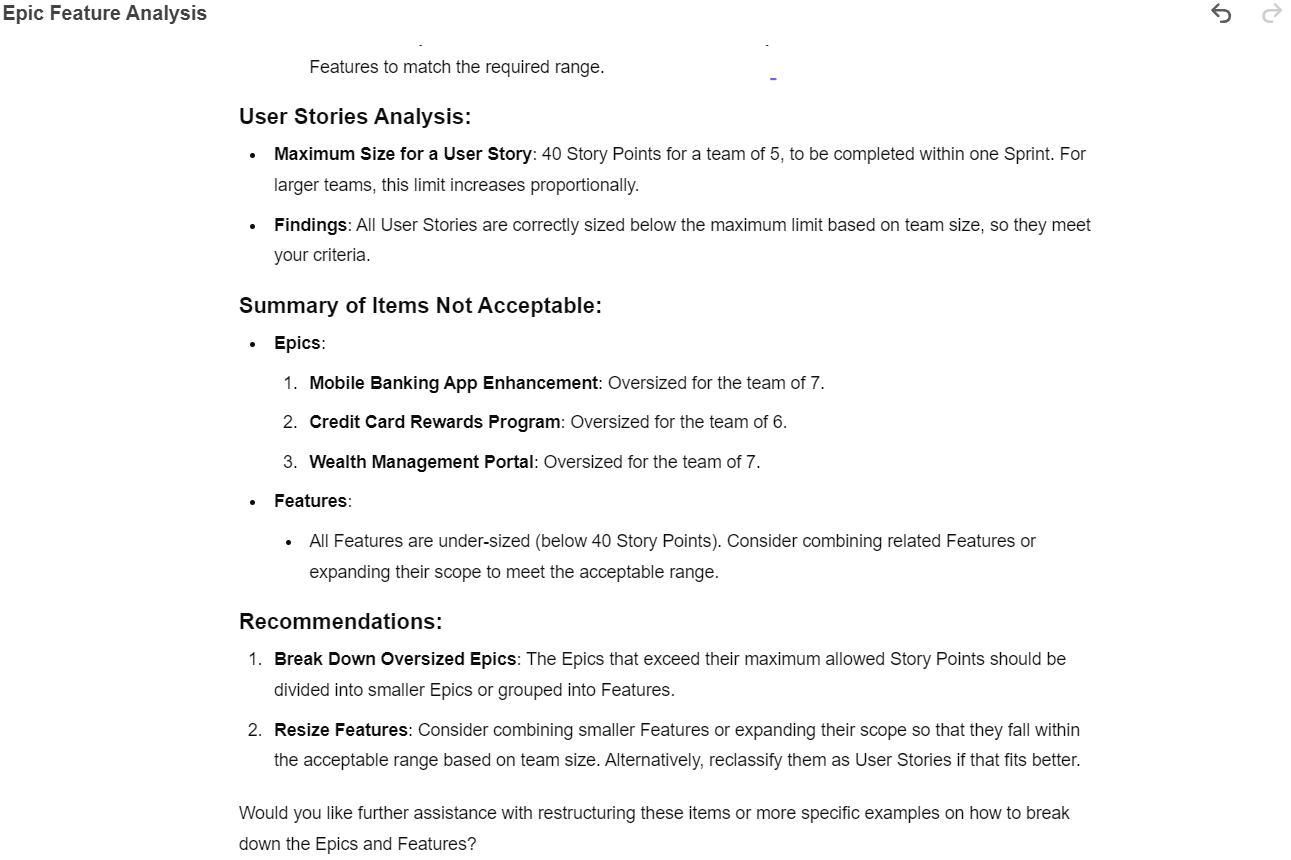

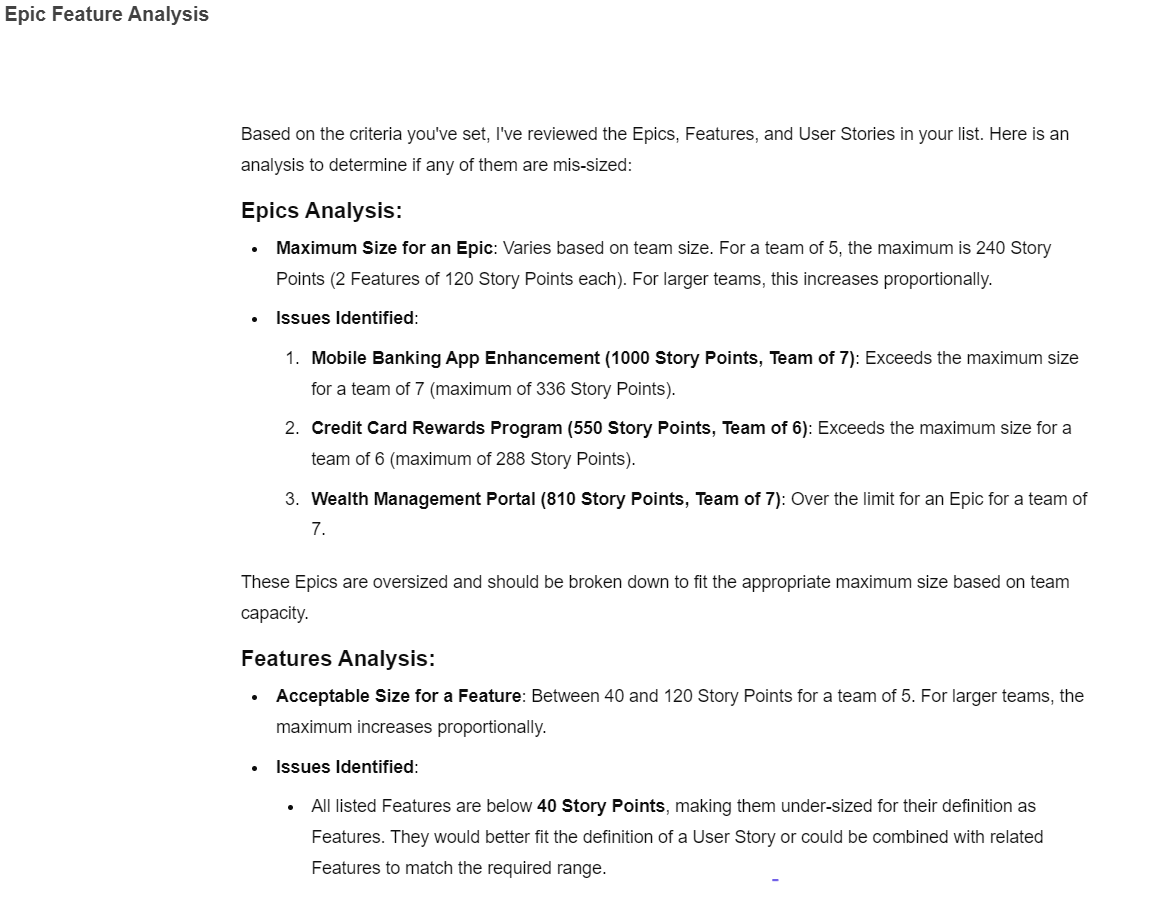

What does this produce?

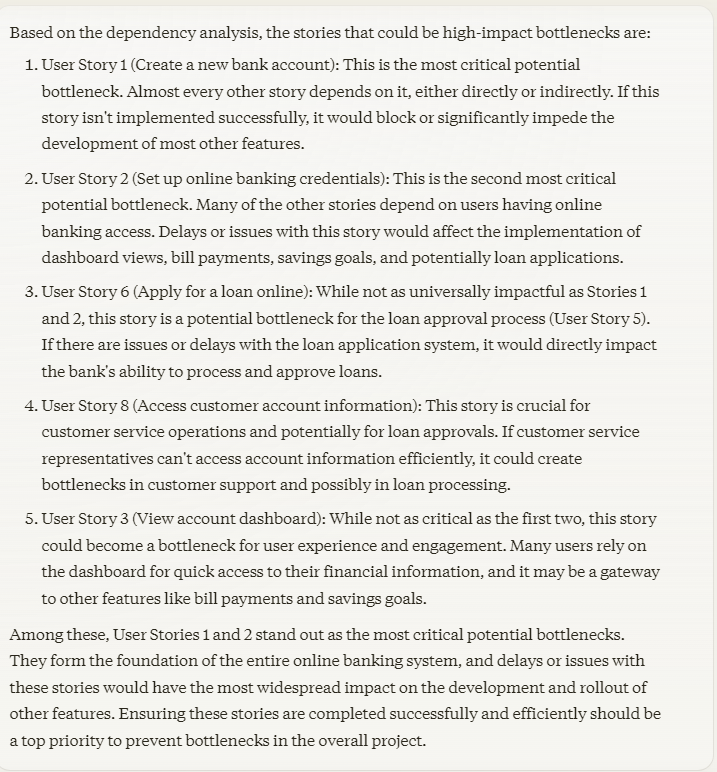

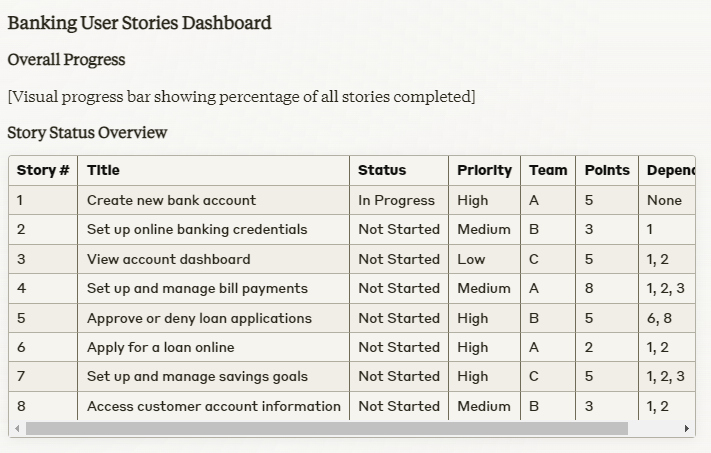

Not only a list of the specific issues, and recommendations…

…but a list of general recommendations.

This produces a workable, actionable list of our next focus.

If we run this, we can have some idea of the maturity of our Backlog in terms of sizing.

I hope this is useful. Let me know how it works for you!

#aiaa #ai #agile #backlog #automation